|

Boltzmann Distribution |

| |

Classical Particles |

|

Let's give a quick look to one of the deepest and most useful insights in thermodynamics.

In order to start simple, I will first go back to the good old times when quantum theory and so on had not yet been

discovered, and particles like atoms, molecules and so on were at best "classical" particles or mass points -

just very small.

Classical physics deals with those mass-points by using classical

mechanics, going back all the way to Newton. |

|

|

As long as your "particles" - a cannon ball, a stone, the earth and

the sun, a hydrogen atom - could be described as "points" positioned at the center of gravity, all is well.

You could precisely account - in principle - for the motion of the earth around the sun, or the movement of balls on the

pool table, even when the particles occasionally hit each other. The only problem was that math or computers were not evolved

enough to really let you do the calculations. There is a big difference between writing down a bunch of equations and actually

solving them. But even if you couldn't do the required math, there wasn't a sliver of

doubt that the solution, if obtained by somebody else, would tell you precisely what will happen. |

|

|

Now make your pool balls much smaller, increase their number to $10^{24}$, and

allow them to move in three directions instead of just two. There was no way then, and

there is still no way now, to calculate anything by following the movement of each and every ball. |

|

Of course, a pool game like this does not

exist, so why worry? Because systems like this do exist under different names. They

are called, for example:

- the air in the room you're breathing at this very moment.

- crystals, like iron or silicon.

- your sword.

- you.

All those systems consist of a large bunch of atoms = particles that can move, more or less restrictedly, in three

directions. Even the atoms in crystals or in your body can move a bit: they vibrate in three directions. |

|

|

Dealing with systems like that (and you realize now that this happens a hell of a lot more often than

dealing with systems that can be described by just a few mass points) requires looking at statistical

parameters of the particle properties and their doings; things like averages

or standard deviations.

The branch of physics dealing with that is called statistical

thermodynamics, and that part of physics, together with quantum theory, turns chemistry into a branch of physics. |

|

|

What kind of statistical properties am I talking about? At least two of those properties are

well known to all of us, even to those who couldn't even spell "statistics":

- The (unordered) average energy of a particle in a system of many. The common word

for that is temperature.

- The average momentum of a particle in a system of many. The common word for that

is pressure.

|

|

So far so easy. If we now go one step deeper, we don't just look for averages

but for the distributions of the parameters in question.

|

|

|

Averages are fine but they do not tell you all that much about your system. You could get

an energy average of "5" for a system with 100 particles if, for example:

- 99 particles have an energy of "0" and one has the value 500.

- All have energy = 5.

- 50 have energy = 6, and the other 50 energy = 4.

- You get the point, pick your own example.
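
If you'd like to check that these cases really share one average while differing wildly in spread, here is a minimal Python sketch. The three lists simply encode the examples above in arbitrary energy units; the dictionary and its labels are made up for illustration.

```python
from statistics import mean, pstdev

# Three hypothetical 100-particle systems from the list above (arbitrary energy units).
distributions = {
    "99 at 0, one at 500": [0] * 99 + [500],
    "all at 5": [5] * 100,
    "50 at 6, 50 at 4": [6] * 50 + [4] * 50,
}

# Same mean everywhere, but very different standard deviations.
for name, energies in distributions.items():
    print(f"{name:>20}: mean = {mean(energies):.1f}, std dev = {pstdev(energies):.2f}")
```

The mean alone cannot tell these systems apart; the standard deviation (or, better, the full distribution) can.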

|

|

|

There are plenty of other ways or distributions of the

energy values that give the same average. Some distributions are more orderly than others; the one with "all have energy

= 5" certainly has a high degree of order.

That's obviously where the second

law and entropy come in. The second law demands categorically that, out of the large number

of possible distributions that give the same average, only those that comply with the second law will really occur in nature.

That tells us what to do. Calculate energy and entropy for all possible distributions, derive the free enthalpy from

that and see which distribution gives you the lowest value. That will be the one Mother Nature will implement.

The

only problem is that going through the whole program of calculating entropy and free energy, finding the minimum, and so

on, isn't all that easy, even for the most simple case of vacancies in a crystal. |

|

Enter Ludwig Boltzmann.

He (with some help from others) simplified everything to a truly amazing degree.

"You are looking at a (classical)

system, any system, where the available energies are known", he says, "and you want to know how many particles

have the energy E in a system with N0 particles that is

in equilibrium at the temperature T? Well, here is the answer:" |

$$
N(E) = N_0 \cdot \exp\left(-\frac{E}{kT}\right)
$$

This is good for all cases where the energy E considered is substantially larger

(let's say at least 0.1 eV) than $E_0$, the smallest possible energy, which is always set at $E_0 = 0$ eV.

There are some minor details to consider if one goes for full generality but that is

of no interest here. |

|

The Boltzmann distribution is a rather simple but extremely

powerful equation. The exponential term is called "Boltzmann factor".
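
To get a numerical feel for the Boltzmann factor exp(-E/kT), here is a small Python sketch. It is only an illustration: the function name and the chosen energies and temperatures are my assumptions, not values from the text; the Boltzmann constant is the standard value in eV/K.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K

def boltzmann_factor(energy_ev, temperature_k):
    """Return exp(-E/kT) for an energy in eV and a temperature in K."""
    return math.exp(-energy_ev / (K_B * temperature_k))

# Illustrative energies (eV) and temperatures (K), chosen arbitrarily.
for temperature_k in (300, 1000, 1500):
    for energy_ev in (0.1, 0.5, 1.0):
        factor = boltzmann_factor(energy_ev, temperature_k)
        print(f"T = {temperature_k:4d} K, E = {energy_ev:.1f} eV: factor = {factor:.3e}")
```

The factor drops by many orders of magnitude as E grows or T falls, which is why anything that needs about 1 eV hardly ever happens at room temperature.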

|

| | |

|

| | |

Application of Boltzmann Distribution to Diffusion |

|

Now let's see what the Boltzmann distribution can do for us. First, we notice

that with its help we can write down the vacancy concentration, which took us a lot of

effort to derive, just so. All we need to do is to reformulate the problem. |

|

|

Whenever an atom in a crystal "has" the energy EV = formation

energy of a vacancy, it will "somehow" escape and a vacancy is formed. The "somehow" doesn't need to

worry us. In equilibrium, whatever needed to be done to achieve it (here: get the atom out) has been done in one way

or another; we wouldn't have equilibrium otherwise.

So how many atoms = vacancies do we have at the energy of EV,

the formation energy of a vacancy? Use the Boltzmann distribution from above and you get |

$$
c_V = \frac{N_V}{N_0} = \exp\left(-\frac{E_V}{kT}\right)
$$

| | Vacancy concentration from Boltzmann distribution |

|

| |

|

|

|

That's exactly what we got stomping through a lot

of tricky math! Isn't that something? |
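
Here is a minimal sketch of what the vacancy concentration exp(-E_V/kT) looks like in numbers. Note the assumptions: the formation energy of 1.0 eV and the number of atoms are hypothetical round values picked for illustration, not data for any particular crystal.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K

def vacancy_concentration(formation_energy_ev, temperature_k):
    """Equilibrium vacancy concentration c_V = exp(-E_V / kT)."""
    return math.exp(-formation_energy_ev / (K_B * temperature_k))

E_V = 1.0   # hypothetical vacancy formation energy in eV
N_0 = 1e22  # hypothetical number of atoms in the crystal

for temperature_k in (300, 800, 1300):
    c_v = vacancy_concentration(E_V, temperature_k)
    print(f"T = {temperature_k:4d} K: c_V = {c_v:.2e}, N_V = {N_0 * c_v:.2e}")
```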

|

|

Thinking a little bit about all that, we realize that the Boltzmann factor

simply describes the probability for some particles that an event needing

the energy E will occur.

That is the best way to interpret the Boltzmann factor, and that will be very

helpful right below. |

|

Now let's go for something else. We wonder how often a vacancy makes a jump, or,

more precisely, how often one of the neighboring atoms jumps into the vacancy. Here is the link to a picture and the other

stuff concerning this issue, which is of overriding importance

to sword making (and about anything else). |

|

|

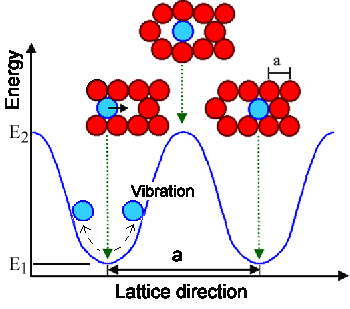

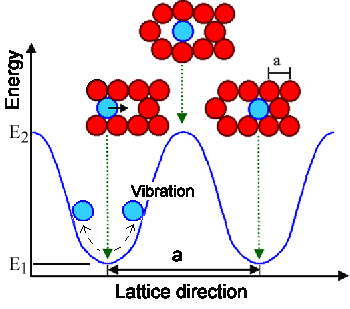

In a somewhat more abstract way the situation is as follows: |

| | |

|

|

|

|

| What happens when an atom jumps into a vacancy |

|

| | |

|

|

|

The blue atom (like all the other atoms) vibrates about its average position with a typical vibration frequency of $\nu \approx 10^{13}$ Hz, as schematically indicated.

The blue line indicates the potential

energy of the atom. Only if the atom has enough energy (= large vibration amplitude) to overcome the energy barrier between

the two positions, can it jump into the vacancy, ending up in a neighboring position. In the figure the height of the energy

barrier is $E_2 - E_1 = E_M$, a quantity that

is typical for a given crystal and called migration energy

EM. |

|

So how often does an atom next to a vacancy jump into the vacancy? Easy. How often

do you jump across a hurdle with a certain height? It is simply the number of attempts per time unit times

the probability that a try was successful.

For the atom the number of tries is just its vibration frequency $\nu$ (that is how often it runs up the barrier per second). Multiply by the probability that it

has the required energy EM (= Boltzmann factor for that energy). In math shorthand

we have |

| | |

|

|

|

$$
r = \text{jump rate} = \nu \cdot \exp\left(-\frac{E_M}{kT}\right)
$$

|

|

| | |

|

|

|
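
The "attempts times success probability" recipe is easy to try out. The sketch below uses the vibration frequency of about 10^13 Hz quoted above; the migration energy of 1.0 eV is a hypothetical value, and the function name is mine.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K
NU = 1e13             # vibration frequency in Hz, as quoted in the text

def jump_rate(migration_energy_ev, temperature_k, attempt_frequency_hz=NU):
    """Jumps per second for an atom next to a vacancy: r = nu * exp(-E_M / kT)."""
    return attempt_frequency_hz * math.exp(-migration_energy_ev / (K_B * temperature_k))

E_M = 1.0  # hypothetical migration energy in eV

for temperature_k in (300, 800, 1300):
    r = jump_rate(E_M, temperature_k)
    print(f"T = {temperature_k:4d} K: r = {r:.2e} jumps/s, mean waiting time = {1 / r:.2e} s")
```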

How many atoms in total will jump per second? Well, only

one of the atoms bordering a vacancy gets a chance to jump. So the number or rate

R of all atoms jumping per second is just the number of vacancies, $N_V$

$= N_0 \cdot c_V = N_0 \cdot \exp(-E_V/kT)$,

times the rate of jumps for any of those atoms, or |

| | |

| |

$$
\frac{R}{N_0} = \nu \cdot \exp\left(-\frac{E_M}{kT}\right) \cdot \exp\left(-\frac{E_V}{kT}\right) = \nu \cdot \exp\left(-\frac{E_M + E_V}{kT}\right)
$$

|

|

| |

|

|

|

(R/N0) · 100 would be the percentage of the atoms in

the crystal that jump per second. If we want to be absolutely precise, we have to make some extremely minor adjustments

to this equation, but the essence of all this is: |

| |

| |

| |

We have diffusion in crystals covered!
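
As a rough numerical check of the combined expression, the sketch below evaluates R/N0 once with the summed energy and once as the product of the two separate Boltzmann factors. Both energies are hypothetical 1.0 eV values chosen for illustration, and the function name is an assumption of mine.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K
NU = 1e13             # vibration frequency in Hz, as quoted in the text

def jumping_fraction_per_second(e_m_ev, e_v_ev, temperature_k):
    """R / N_0 = nu * exp(-(E_M + E_V) / kT)."""
    return NU * math.exp(-(e_m_ev + e_v_ev) / (K_B * temperature_k))

E_M, E_V = 1.0, 1.0  # hypothetical migration and formation energies in eV

for temperature_k in (600, 1000, 1400):
    combined = jumping_fraction_per_second(E_M, E_V, temperature_k)
    # Same quantity written as the product of the two separate Boltzmann factors.
    product = (NU * math.exp(-E_M / (K_B * temperature_k))
               * math.exp(-E_V / (K_B * temperature_k)))
    print(f"T = {temperature_k:4d} K: R/N_0 = {combined:.2e} per second "
          f"(product form: {product:.2e})")
```

Because the two energies simply add in the exponent, diffusion by vacancies behaves as if it had one effective energy barrier, E_M + E_V.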

|

|

| | |

|

|

I could go on and on about what you can do with the Boltzmann distribution. It

certainly is one of the most important relations in Materials Science and there are plenty of equations that contain a Boltzmann factor.

I won't. I'd rather give you a very brief and superficial glance at what

happens for real particles. The Boltzmann distribution, after all, is only valid for

classical particles that do not really exist but are only approximations

to the real thing. |

| | |

|

| |

Boltzmann Distribution and "Real"

Particles |

|

Once more: There is no such thing as a classical particle that follows Boltzmann statistics. Sorry. Classical particles, it turns out, are just

approximations to real particles. |

|

|

As you (should) know, there are many different kinds of real

particles out there. As you also know, there are many flavors of "ice cream" out there - but just two basic

kinds: "cream" and "sorbet". Same thing for wine; you have red or white. People, including extremely

strange guys like (insert your choice), always come as male or female.

While there

are many kinds of particles, including very strange stuff like "naked bottom quarks" and simple things like protons,

neutrons, electrons, atoms, photons, positrons, muons, ..., they also come in just two

basic kinds: |

- Bosons: any number of them can occupy the same state.
- Fermions: no two of them can ever occupy the same state.

|

|

So what is a (quantum mechanical) "state"? It is simply one

of the solutions of the Schrödinger equation,

the fundamental equation that replaces "Newton's laws" in quantum mechanics, for a given problem. The problem

could be the bunch of electrons careening around in a metal, or a lot of atoms vibrating in a crystal, or anything else

you care to formulate.

For general problems like that there are always infinitely

many solutions - but that is nothing new. If you calculate for classical particles what kind of velocities or energies

they could have, the solution of the problem says: "infinitely many; any

you like are possible" (there are no restrictions on velocities with only "Newton"!). The real

problem in somewhat more advanced science / math is always to find the particular solutions,

out of many, that apply to a particular sub group of the problem. |

|

In quantum mechanics, we have infinitely many solutions to the question above

as in classical mechanics, but not all velocities and energies are allowed. Allowed

energies, to give a (schematic) example, might be E = 1, 2, 3, .. but never E = 1.2

or E = 3.765. |

|

|

To stay simple, let's assume for the moment that every state = special solution of the Schrödinger

equation has exactly one energy associated with it, and that every state has a different energy.

That allows us to look in a simple way at quantum

distribution functions. We do that separately for Bosons and Fermions. |

|

1. Distribution function for Bosons or Bose-Einstein

statistics. |

|

|

All we need to know is:

i) The exact equation is somewhat different from the Boltzmann

statistic as given above.

ii) In most practical cases the mathematically

simpler Boltzmann statistics is a good approximation to the Bose-Einstein statistics.

This is as it should be. In both

cases an arbitrary number of particles could have the same energy. In particular, all particles could

have the lowest energy, and at very low temperatures all particles will have that lowest

energy. |
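
For those who want to see how good the approximation is, here is a hedged sketch. It uses the standard Bose-Einstein occupation 1/(exp(x) - 1) with x = (E - μ)/kT, where μ is the chemical potential; that symbol does not appear in this text and is introduced here only for the comparison. The Boltzmann form is exp(-x).

```python
import math

def bose_einstein(x):
    """Mean occupation 1 / (exp(x) - 1), with x = (E - mu) / kT > 0."""
    return 1.0 / math.expm1(x)

def boltzmann(x):
    """Classical approximation exp(-x)."""
    return math.exp(-x)

for x in (0.1, 1.0, 3.0, 5.0, 10.0):
    be, mb = bose_einstein(x), boltzmann(x)
    print(f"x = {x:4.1f}: Bose-Einstein = {be:.4e}, Boltzmann = {mb:.4e}, ratio = {be / mb:.4f}")
```

The two agree closely once the energy is a few kT above μ, and differ strongly only for the lowest energies, where bosons pile up.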

|

|

I hear you. "What exactly is then the difference between a classical particle, that you

claim does not exist, and a Boson? It looks as if they do pretty much the same thing".

Well, yes - but there is a difference. Classical particles we can always distinguish in principle. We can, so to speak, attach a number, paint them in different colors,

or give them a name to which they respond. No matter how small they are, there is always something smaller (your classical

particles divided into 1,000 smaller ones, for example), that you can use to make some kind of label.

Real particles, no matter if they are bosons or fermions, we cannot

distinguish. There is no way to figure out if the particular electron that just produced some current in your solar cell

is the same one that did it 5 minutes ago. And it is not that we just lack the means to do so. It is an intrinsic or inalienable

property of real particles that they are indistinguishable. The universe subscribes to communism: all particles (of one

kind) are exactly equal and indistinguishable. No proton will ever be richer, taller, healthier, more beautiful, smarter,

or (insert your choice of properties) than all the other ones. |

|

|

If you think about it for a few years, you start to realize that this is really, really

weird. But that is the way the universe works and that's why there is a certain (if often small) difference between classical

particles and Bosons. |

|

2. Distribution function for Fermions or Fermi-Dirac

statistics. |

|

|

Now we have a huge difference to classical particles. It's best to look at a basic figure

to see that. |

| |

|

|

|

|

| Comparison of Boltzmann and Fermi Distribution. |

|

| |

|

|

|

Shown is the number of particles at a certain energy for

the Boltzmann case, and the probability that a particle is found at a certain energy

for the Fermi case.

We need two different scales because on just one scale one of the two curves would disappear in

an axis and become "invisible". |

|

|

In the Boltzmann case we have a hell of a lot of particles

with energies at or close to the minimum energy of the system (always at $E_0 = 0$ eV).

The number "$10^{20}$" on the scale has no specific meaning and only illustrates "a hell of a lot".

If the temperature is decreased, the curve gets steeper; at T = 0 K, it is identical with the axis. |

|

|

In the Fermi case we have a probability of 1 for

lower energies that a particle "has" that energy or, as we say, that the place at some allowed energy is occupied

by one of the fermions of the system. This goes up to very high energies since all particles need to occupy some place,

and so far only one particle is allowed per energy level. At T = 0 K the curve would be a rectangular box.

The

particles of the system occupy as many energy levels as are needed to accommodate them all. Occupation ends only around

some special energy called Fermi energy

EF, where at T

= 0 K the last particle finds its place. Places above the Fermi energy then are empty.

At finite

temperatures, the Fermi distribution "softens" around the Fermi energy as shown. A few particles have higher energies

than the Fermi energy, and some places at lower energies are unoccupied. That produces some disorder or entropy, needed for nirvana or thermal equilibrium since the second

law still applies. |
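
The "softening" can be made visible with a few numbers. The sketch below evaluates the standard Fermi distribution 1/(exp((E - E_F)/kT) + 1) slightly below and slightly above the Fermi energy. The Fermi energy of 5.0 eV and the offsets of 0.1 eV are hypothetical choices; T = 1 K stands in for "almost 0 K" because the formula cannot be evaluated at exactly T = 0.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K

def fermi(energy_ev, fermi_energy_ev, temperature_k):
    """Fermi distribution 1 / (exp((E - E_F) / kT) + 1), written to avoid overflow."""
    x = (energy_ev - fermi_energy_ev) / (K_B * temperature_k)
    if x > 0:
        e = math.exp(-x)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(x))

E_F = 5.0  # hypothetical Fermi energy in eV

for temperature_k in (1, 300, 1000):
    below = fermi(E_F - 0.1, E_F, temperature_k)
    above = fermi(E_F + 0.1, E_F, temperature_k)
    print(f"T = {temperature_k:4d} K: f(E_F - 0.1 eV) = {below:.4f}, f(E_F + 0.1 eV) = {above:.4f}")
```

Exactly at the Fermi energy the occupation probability is 1/2 at any finite temperature; the box edge only gets smeared out around that point.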

|

|

For most applications, only the "high-energy tail" of the Fermi distribution is

important. This part can be approximated very well with the (much simpler) Boltzmann distribution as shown. In other words:

High-energy fermions / electrons behave pretty much like classical particles. |

|
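
How well does the Boltzmann form fit the high-energy tail? A minimal sketch, using the dimensionless variable x = (E - E_F)/kT (my shorthand, not the text's) and comparing the Fermi distribution 1/(exp(x) + 1) with exp(-x):

```python
import math

def fermi(x):
    """Fermi distribution as a function of x = (E - E_F) / kT, for x >= 0."""
    return math.exp(-x) / (1.0 + math.exp(-x))

def boltzmann_tail(x):
    """Boltzmann approximation exp(-x) to the high-energy tail."""
    return math.exp(-x)

for x in (0.0, 1.0, 2.0, 3.0, 5.0, 10.0):
    exact, approx = fermi(x), boltzmann_tail(x)
    error_percent = 100.0 * (approx - exact) / exact
    print(f"(E - E_F)/kT = {x:4.1f}: Fermi = {exact:.3e}, Boltzmann = {approx:.3e}, "
          f"error = {error_percent:.2f} %")
```

The relative error of the approximation is itself exp(-x), so a few kT above the Fermi energy the two curves are practically indistinguishable.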

And now we know (sort of) under what kinds of circumstances real particles might

be approximated by classical particles. This is great because the math for classical particles is so much easier than that

for real particles. But that is the only reason why we use the Boltzmann distribution

and so on. |

|

For the finish, let's look at some of the most important equations

for electrons; our most important fermions: |

|

| |

| |

$$
n(E; E_F, T) = D(E) \cdot f(E; E_F, T) \cdot \Delta E
$$

$$
f(E; E_F, T) = \frac{1}{\exp\left(\dfrac{E - E_F}{kT}\right) + 1}
$$

| | |

|

|

|

| |

|

|

| Fundamental Equations for Electronics |

f(E; EF, T) = Fermi distribution.

n(E; EF, T) = concentration of electrons at energy E

D(E) = "density of states". |

|

| |

|

|

Those are two of the few fundamental equations for all of solid state electronics.

They are at the root of almost everything - from the simple conductivity of a copper wire to the transistors and so on in

microchips. And they are far simpler than they look (a bit like Wagner's music, which is far better than it sounds, according

to Mark Twain). Let's see: |

| |

|

f(E; EF, T), the Fermi-Dirac distribution, is a function

of the energy E, with Fermi energy EF and temperature T as parameters. The Fermi distribution is a universal function,

it is valid for all fermions, always and everywhere. The Fermi energy, however, is

a kind of material constant and thus describes some property of a given material.

The "shape" or graph of

the Fermi distribution is simple, it looks as shown above. |

|

|

Now we must overcome our "one electron - one energy" restriction from above. In

all real systems, for example the system of the electrons in a piece of silicon (Si),

there are always several states that happen to have the same energy. Since electrons only need to occupy different states, we

can have as many electrons with the same energy as there are states at that energy. The number of states with the same energy

we call "density of states" D(E).

The number

or density (number per volume) of electrons within some small energy interval $\Delta E$

is then simply given by multiplying the number of places there (= $D(E) \cdot \Delta E$)

with the probability f(E; EF, T) that a place is occupied. That is what the first

equation specifies. It also gives us the density of electrons in a semiconductor that we can "work with" and thus

the most important parameter for all semiconductor devices. |
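
The recipe "number of places times occupation probability" can be sketched in a few lines. Everything material-specific here is an assumption: the density of states is a toy function proportional to the square root of E (not that of silicon or any real crystal), and the Fermi energy, temperature and interval width are hypothetical values.

```python
import math

K_B = 8.617333262e-5  # Boltzmann constant in eV/K

def fermi(energy_ev, fermi_energy_ev, temperature_k):
    """Fermi distribution, written to avoid overflow for large arguments."""
    x = (energy_ev - fermi_energy_ev) / (K_B * temperature_k)
    if x > 0:
        e = math.exp(-x)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(x))

def density_of_states(energy_ev):
    """Toy D(E) proportional to sqrt(E); a stand-in, not a real material."""
    return math.sqrt(energy_ev)

def electrons_in_interval(energy_ev, delta_e_ev, fermi_energy_ev, temperature_k):
    """n(E) = D(E) * f(E; E_F, T) * Delta E for one small energy interval."""
    return (density_of_states(energy_ev)
            * fermi(energy_ev, fermi_energy_ev, temperature_k)
            * delta_e_ev)

E_F, T, DELTA_E = 5.0, 300.0, 0.01  # hypothetical values (eV, K, eV)

# Sum the contributions of all intervals between 0 and 10 eV (midpoint of each interval).
total = sum(electrons_in_interval((i + 0.5) * DELTA_E, DELTA_E, E_F, T) for i in range(1000))
print(f"Total electron density (arbitrary units): {total:.4f}")
```

Swapping in a different `density_of_states` or Fermi energy is the numerical counterpart of picking another material or doping it.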

|

Looking at all of this in a general way, we see how technology emerges out of

science:

- The Fermi distribution f(E; EF, T) is a universal (= valid in the whole universe)

and rather simple function. It expresses a basic property of the universe and thus rather deep science.

- The density of states D(E) is material specific and typical for the perfect

crystal. It is always a rather complicated function, hard to calculate but not too difficult to measure. It expresses basic

electronic properties of an ideal crystal and cannot be changed.

- The Fermi energy EF, while linked to the density of states, is sensitive to crystal defects. It therefore expresses properties of a real crystal

and can be changed.

- The temperature T is "the agent of change". It changes the

Fermi distribution and the Fermi energy to some extent.

|

|

|

Where does that leave you when you want to make an electronic device, implying that you must

have the "right" electron density n?

Well, provided you know what your device is supposed to

do, you go through these steps:

- Define the density of states you need. In other words: pick the best-suited semiconductor.

- Manipulate the Fermi energy in all or in parts of your material (= semiconductor) by introducing the right defects at

the right places. Most people call that procedure "doping".

- Use temperature to achieve proper distributions of the doping defects (= substitutional impurities).

|

|

|

Easy. Well, not quite. There are a few other things you need for making devices, like insulators

and conductors, besides your semiconductor. Unfortunately, the "defect engineering" required to adjust the Fermi

energies is not all that simple. It can be done, however, as the computer you are using right now amply demonstrates. |

| | |

|

© H. Föll (Iron, Steel and Swords script)